React SEO Strategies and Best Practices

While React is often lauded for making front-end development more efficient, this popular library can be problematic for search engines.

In this article, Toptal data visualization engineer Vineet Markan examines why React is challenging for SEO and outlines what software engineers can do to improve the search rankings of React websites.

Vineet specializes in building data visualization interfaces and has used React extensively in his projects.

Editor’s note: This article was updated on 10/25/22 by our editorial team. It has been modified to include recent sources and to align with our current editorial standards.

React was developed to create interactive UIs that are declarative, modular, and cross-platform. Today, it is one of the more popular—if not the most popular—JavaScript frameworks for writing performant front-end applications. Initially developed to write Single Page Applications (SPAs), React is now used to create full-fledged websites and mobile applications. However, the same factors and features that led to its popularity are causing a number of React SEO challenges.

If you have extensive experience in conventional web development and move to React, you will notice an increasing amount of your HTML and CSS code moving into JavaScript. This is because React doesn’t recommend directly creating or updating UI elements, but instead recommends describing the “state” of the UI. React will then update the DOM to match the state in the most efficient way.

As a result, all the changes to the UI or DOM must be made via React’s engine. Although convenient for developers, this may mean longer load times for users and more work for search engines to find and index the content, causing potential issues for SEO with React webpages.

In this article, we will address challenges faced while building SEO-performant React apps and websites, and we will outline several strategies used to overcome them.

How Google Crawls and Indexes Webpages

Since Google receives over 90% of all online searches, it’s worthwhile to take a closer look at its crawling and indexing process.

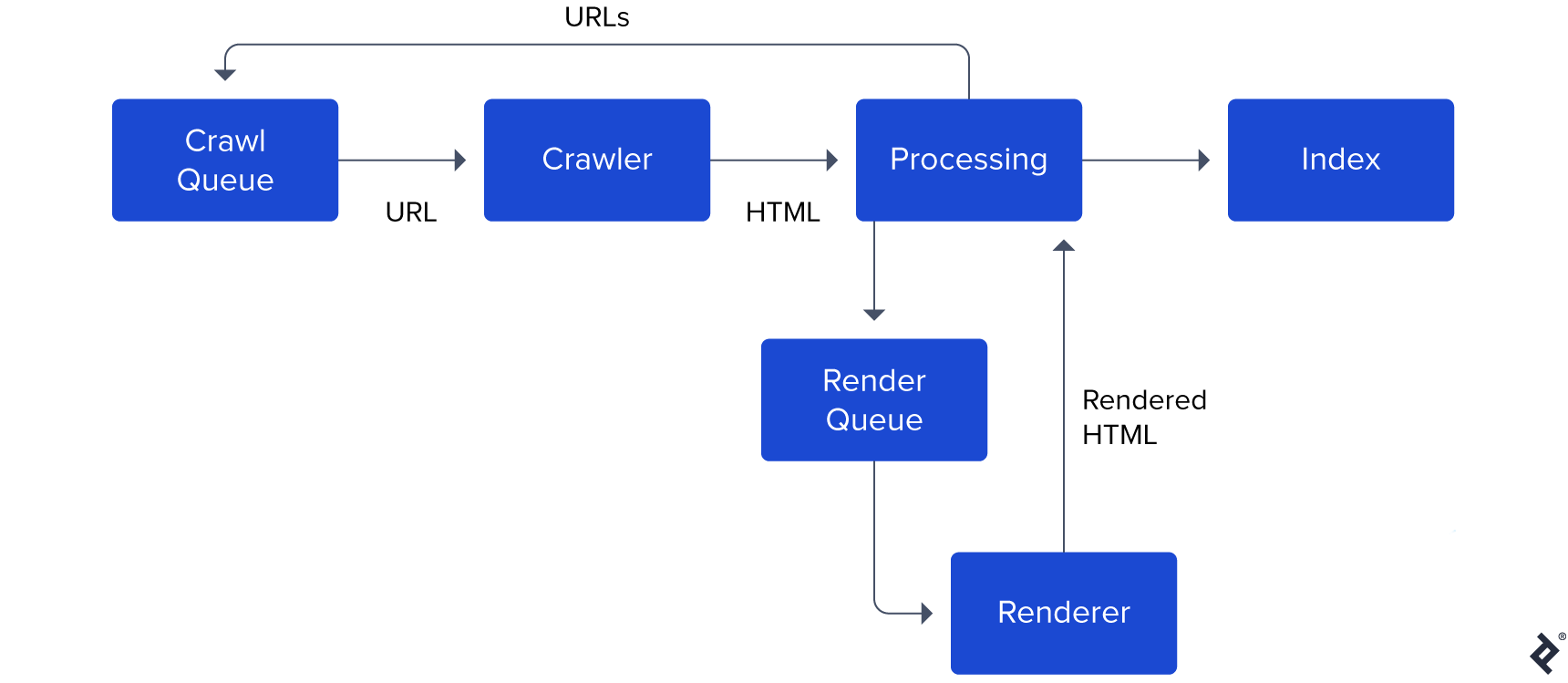

This snapshot taken from Google’s documentation can help us. (Note: This is a simplified block diagram. The actual Googlebot is far more sophisticated.)

Google Indexing Steps:

- Googlebot maintains a crawl queue containing all the URLs it needs to crawl and index in the future.

- When the crawler is idle, it picks up the next URL in the queue, makes a request, and fetches the HTML.

- After parsing the HTML, Googlebot determines if it needs to fetch and execute JavaScript to render the content. If yes, the URL is added to a render queue.

- Later, the renderer fetches and executes JavaScript to render the page and sends the rendered HTML back to the processing unit.

- The processing unit extracts all the URLs from the <a> tags mentioned on the webpage and adds them back to the crawl queue.

- The content is added to Google’s index.

Notice that there is a clear distinction between the Processing stage that parses HTML and the Renderer stage that executes JavaScript. This distinction exists because executing JavaScript is expensive, given that Googlebots need to look at more than 130 trillion webpages. So when Googlebot crawls a webpage, it parses the HTML immediately and then queues the JavaScript to run later. Google’s documentation mentions that a page stays in the render queue for a few seconds, though it may be longer.
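The two-stage flow described above can be sketched as a toy simulation. This is not how Googlebot is implemented; the `pages` map, the `renderedHtml` field, and the function names are all hypothetical and exist only to show why JavaScript-dependent content gets indexed later than static content.

```javascript
// Toy model of the crawl -> process -> render flow (all names hypothetical).
// Each URL maps to the HTML served on the first request and, if the page
// needs JavaScript, the HTML that exists only after rendering.
const pages = {
  "/": { html: '<a href="/app">App</a> <a href="/docs">Docs</a>', renderedHtml: null },
  "/app": {
    html: '<div id="root"></div>',
    renderedHtml: '<div id="root"><a href="/app/item">Item</a></div>',
  },
  "/docs": { html: "<p>Static documentation</p>", renderedHtml: null },
  "/app/item": { html: "<p>Item details</p>", renderedHtml: null },
};

// Pull href values out of <a> tags (a naive regex, good enough for a sketch).
function extractLinks(html) {
  return [...html.matchAll(/<a\s[^>]*href="([^"]+)"/g)].map((m) => m[1]);
}

function crawl(startUrl) {
  const crawlQueue = [startUrl];
  const renderQueue = [];
  const seen = new Set([startUrl]);
  const index = new Map(); // insertion order = indexing order

  // Processing stage: queue newly discovered links, then index the content.
  const processPage = (url, html) => {
    for (const link of extractLinks(html)) {
      if (!seen.has(link) && pages[link]) {
        seen.add(link);
        crawlQueue.push(link);
      }
    }
    index.set(url, html);
  };

  while (crawlQueue.length > 0 || renderQueue.length > 0) {
    if (crawlQueue.length > 0) {
      const url = crawlQueue.shift();
      const page = pages[url];
      if (page.renderedHtml !== null) {
        renderQueue.push(url); // defer the expensive JavaScript step
      } else {
        processPage(url, page.html);
      }
    } else {
      // In this model, rendering runs only once the crawl queue is empty.
      const url = renderQueue.shift();
      processPage(url, pages[url].renderedHtml);
    }
  }
  return index;
}
```

In this model, `[...crawl("/").keys()]` lists `/docs` before `/app`, and the link to `/app/item` is discovered only after `/app` has been rendered.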

It is also worth mentioning the concept of crawl budget. Google’s crawling is limited by bandwidth, time, and availability of Googlebot instances. It allocates a specific budget or resources to index each website. If you are building a large content-heavy website with thousands of pages (e.g., an e-commerce website), and these pages use a lot of JavaScript to render the content, Google may not be able to read as much content from your website.

Note: Google’s documentation includes guidelines for managing your crawl budget.

Why React SEO Remains Challenging

This brief overview of Googlebot’s crawling and indexing only scratches the surface. Software engineers should identify the potential issues faced by search engines trying to crawl and index React pages.

Here is a closer look at what makes React SEO challenging and what developers can do to address and overcome some of these challenges.

Empty First-pass Content

We know React applications rely heavily on JavaScript and often run into problems with search engines. This is because React employs an app shell model by default. The initial HTML does not contain any meaningful content, and a user or a bot must execute JavaScript to see the page’s actual content.

This approach means that Googlebot detects an empty page during the first pass. The content is seen by Google only when the page is rendered. When dealing with thousands of pages, this will delay the indexing of content.
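A rough way to see what a crawler receives before rendering is to strip scripts and tags from the initial HTML and look at the text that remains. The sketch below is regex-based rather than a real HTML parser, and `firstPassText` and the sample markup are hypothetical names chosen for illustration.

```javascript
// Approximate the text a crawler can read from the body without running JavaScript.
function firstPassText(html) {
  const bodyMatch = /<body[^>]*>([\s\S]*?)<\/body>/i.exec(html);
  const body = bodyMatch ? bodyMatch[1] : html;
  return body
    .replace(/<(script|style)\b[^>]*>[\s\S]*?<\/\1>/gi, " ") // drop script and style blocks
    .replace(/<[^>]+>/g, " ") // drop the remaining tags
    .replace(/\s+/g, " ")
    .trim();
}

// A typical app shell: no content until the bundle runs.
const appShell = `<!DOCTYPE html>
<html>
  <head><title>My Store</title></head>
  <body>
    <div id="root"></div>
    <script src="/static/js/main.js"></script>
  </body>
</html>`;

// The same page with the first render done on the server.
const serverRendered = `<!DOCTYPE html>
<html>
  <head><title>My Store</title></head>
  <body>
    <div id="root"><h1>Blue Widget</h1><p>In stock</p></div>
    <script src="/static/js/main.js"></script>
  </body>
</html>`;

console.log(JSON.stringify(firstPassText(appShell))); // ""
console.log(firstPassText(serverRendered)); // Blue Widget In stock
```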

Load Time and User Experience

Fetching, parsing, and executing JavaScript takes time. JavaScript may also need to make network calls to fetch the content, and the user may need to wait a while before they can view the requested information.

Google has laid out a set of Core Web Vitals related to user experience, which it uses in its ranking criteria. A longer load time may affect the user experience score, prompting Google to rank a site lower.

Page Metadata

<meta> tags are helpful because they allow Google and social media websites to show appropriate titles, thumbnails, and descriptions for pages. But these websites rely on the <head> tag of the fetched webpage to get this information; they do not execute JavaScript for the target page.

React renders all the content, including meta tags, on the client. Since the app shell is the same for the entire website/application, it may be hard to adapt the metadata for individual pages and applications.
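One way around this is to build the <head> content per page on the server (or at build time) so that it is present in the fetched HTML. The sketch below assumes a hypothetical `renderHead` helper and page object; the `og:` properties are the Open Graph tags commonly read by social media previews.

```javascript
// Escape text for safe use in HTML content and attribute values.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build page-specific head tags (hypothetical helper, not a React API).
function renderHead({ title, description, image }) {
  return [
    `<title>${escapeHtml(title)}</title>`,
    `<meta name="description" content="${escapeHtml(description)}">`,
    `<meta property="og:title" content="${escapeHtml(title)}">`,
    `<meta property="og:description" content="${escapeHtml(description)}">`,
    `<meta property="og:image" content="${escapeHtml(image)}">`,
  ].join("\n");
}

console.log(
  renderHead({
    title: "Blue Widget",
    description: "A sturdy widget, available in blue.",
    image: "https://example.com/images/blue-widget.png",
  })
);
```

The server would place this string inside the <head> of the HTML for the requested route instead of serving one shared shell for every URL.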

Sitemap

A sitemap is a file where you provide information about the pages, videos, and other files on your site and the relationships between them. Search engines like Google read this file to more intelligently crawl your site.

React does not have a built-in way to generate sitemaps. If you are using something like React Router to handle routing, you can find tools that can generate a sitemap, though it may require some effort.
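If the list of routes is known, a sitemap can also be produced with a small script. The following is a minimal sketch, assuming a hypothetical `routes` array that you maintain yourself or derive from your router configuration; the XML follows the sitemaps.org format.

```javascript
// Escape characters that are not allowed in XML text.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap from a list of { path, lastmod } entries (lastmod is optional).
function buildSitemap(baseUrl, routes) {
  const entries = routes.map(({ path, lastmod }) => {
    const loc = escapeXml(new URL(path, baseUrl).href);
    const lastmodTag = lastmod ? `\n    <lastmod>${escapeXml(lastmod)}</lastmod>` : "";
    return `  <url>\n    <loc>${loc}</loc>${lastmodTag}\n  </url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// Hypothetical routes for illustration.
const routes = [
  { path: "/", lastmod: "2022-10-25" },
  { path: "/blog/hello" },
];
console.log(buildSitemap("https://example.com", routes));
```

Dynamic routes such as individual blog posts need to be expanded into concrete paths first, usually by querying the same data source the pages use.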

Non-React SEO Considerations

These considerations are related to setting up good SEO practices in general.

- Have an optimal URL structure to give humans and search engines a sense of what to expect on the page.

- Optimize the robots.txt file to help search bots understand how to crawl pages on your website.

- Use a CDN to serve all the static assets like CSS, JS, fonts, etc., and use responsive images to reduce load times.

We can address many of these problems by using server-side rendering (SSR) or pre-rendering. We review these approaches below.

Enter Isomorphic React

The dictionary definition of isomorphic is “corresponding or similar in form.”

In React terms, this means that the server has a similar form to the client. In other words, you can reuse the same React components on the server and client.

This isomorphic approach enables the server to render the React app and send the rendered version to our users and search engines so they can view the content instantly while JavaScript loads and executes in the background.

Frameworks like Next.js or Gatsby have popularized this approach. We should note that isomorphic components can look substantially different from conventional React components. For example, they can include code that runs on the server instead of the client. They can even include API secrets (although server code is stripped out before being sent to the client).

Note that these frameworks abstract away a lot of complexity but also introduce an opinionated way of writing code. We will dig into the performance trade-offs in another section.

We will also do a matrix analysis to understand the relationship between render paths and website performance. But first, let’s review some basics of measuring website performance.

Metrics for Website Performance

Let’s examine some of the factors that search engines use to rank websites.

Apart from answering a user’s query quickly and accurately, Google believes that a good website should have the following attributes:

- Users should be able to access content without too much waiting time.

- It should respond to a user’s actions early.

- It should load quickly.

- It should not fetch unnecessary data or execute expensive code, so that it does not drain a user’s data or battery.

These features map roughly to the following metrics:

- Time to First Byte (TTFB): The time between clicking a link and the first byte of content coming in.

- Largest Contentful Paint (LCP): The time when the requested article becomes visible. Google recommends keeping this value under 2.5 seconds.

- Time to Interactive (TTI): The time at which a page becomes interactive (a user can scroll, click, etc.).

- Bundle Size: The total number of bytes downloaded and code executed before the page becomes fully visible and interactive.
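As a small illustration of how two of these metrics can be checked in code, the sketch below rates an LCP value and derives TTFB from a navigation timing entry. The 2.5-second bound comes from the recommendation above; the 4-second bound for "poor" is Google's published threshold. The function names are hypothetical, and in a browser the entry would come from `performance.getEntriesByType("navigation")[0]`.

```javascript
// Classify an LCP measurement given in milliseconds.
function rateLcp(lcpMs) {
  if (lcpMs <= 2500) return "good";
  if (lcpMs <= 4000) return "needs improvement";
  return "poor";
}

// TTFB from a PerformanceNavigationTiming-like object:
// time from the start of navigation until the first response byte arrives.
function timeToFirstByte(navigationEntry) {
  return navigationEntry.responseStart - navigationEntry.startTime;
}

// Example with a hand-written entry so the snippet also runs outside a browser.
const sampleEntry = { startTime: 0, responseStart: 180 };
console.log(timeToFirstByte(sampleEntry)); // 180
console.log(rateLcp(3100)); // needs improvement
```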

We will revisit these metrics to better understand how various rendering paths may affect each one.

Next, let’s understand different render paths available to React developers.

Render Paths

We can render a React application in the browser or on the server and produce varying outputs.

Two functions change significantly between client- and server-side rendered apps: routing and code splitting. We take a closer look at these below.

Client-side Rendering (CSR)

Client-side rendering is the default render path for a React SPA. The server will serve a shell app that contains no content. Once the browser downloads, parses, and executes included JavaScript sources, the HTML content is populated or rendered.

The routing function is handled by the client app by managing the browser history. This means that the same HTML file is served irrespective of which route was requested, and the client updates its view state after it is rendered.
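The split of responsibilities can be sketched in two small functions: the server maps every non-asset URL to the same shell, and the client decides which view to show. This is a simplified illustration with hypothetical names and routes, not how React Router is implemented.

```javascript
// Server side: asset requests are served as-is, everything else gets the shell.
function resolveFile(pathname) {
  const lastSegment = pathname.split("/").pop();
  return lastSegment.includes(".") ? pathname : "/index.html";
}

// Client side: map the current pathname to a view (hypothetical route table).
const clientRoutes = [
  { pattern: /^\/$/, view: "Home" },
  { pattern: /^\/blog\/([^/]+)$/, view: "BlogPost" },
];

function matchRoute(pathname) {
  for (const { pattern, view } of clientRoutes) {
    const match = pattern.exec(pathname);
    if (match) return { view, params: match.slice(1) };
  }
  return { view: "NotFound", params: [] };
}

console.log(resolveFile("/blog/hello")); // /index.html
console.log(matchRoute("/blog/hello")); // { view: 'BlogPost', params: [ 'hello' ] }
```

Because the server response is identical for `/` and `/blog/hello`, a crawler that does not execute JavaScript cannot tell the two pages apart.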

Code splitting is relatively straightforward. You can split your code using dynamic imports or React.lazy. Split it so that only needed dependencies are loaded based on route or user actions.

If the page needs to fetch data from the server to render content—say, a blog title or product description—it can do so only when the relevant components are mounted and rendered.

The user will most likely see a “Loading data” sign or indicator while the website fetches additional data.

Client-side Rendering With Bootstrapped Data (CSRB)

Consider the same scenario as CSR, but instead of fetching data after the DOM is rendered, let’s say that the server sends the relevant data bootstrapped inside the served HTML.

We could include a node that looks something like this:

```html
<script id="data" type="application/json">
{"title": "My blog title", "comments":["comment 1","comment 2"]}
</script>
```

And parse it when the component mounts:

```javascript
// Read the bootstrapped JSON from the script tag and parse it.
const data = JSON.parse(document.getElementById('data').textContent);
```

We just saved ourselves a round trip to the server. We will see the trade-offs in a bit.
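On the server, the data has to be serialized carefully: if a string in the data contains `</script>`, it would close the tag early. A common precaution is to escape `<` in the JSON output. The sketch below shows one way to do that; `serializeForScript` and `renderBootstrapTag` are hypothetical helper names.

```javascript
// Serialize data for embedding in a <script> tag.
// "\u003c" is a valid JSON escape for "<", so JSON.parse restores the original value.
function serializeForScript(data) {
  return JSON.stringify(data).replace(/</g, "\\u003c");
}

// Produce the bootstrapped-data node that the server includes in the HTML.
function renderBootstrapTag(data) {
  return `<script id="data" type="application/json">${serializeForScript(data)}</script>`;
}

const post = {
  title: "My blog title",
  comments: ["comment 1", "this one mentions </script> in the text"],
};
console.log(renderBootstrapTag(post));
```

The client-side parsing step stays the same, since the escape is undone by `JSON.parse`.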

Server-side Rendering to Static Content (SSRS)

Imagine a scenario in which we need to generate HTML on the fly.

If we are building an online calculator and the user issues a query of the sort /calculate/34+15 (leaving out URL escaping), we need to process the query, evaluate the result, and respond with generated HTML.

Our generated HTML is quite simple in structure and we don’t need React to manage and manipulate the DOM once the generated HTML is served.

So we are just serving HTML and CSS content. You can use the renderToStaticMarkup method to achieve this.

The routing will be entirely handled by the server as it needs to recompute HTML for each result, although CDN caching can be used to serve responses faster. CSS files can also be cached by the browser for faster subsequent page loads.
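The calculator scenario can be sketched as a request handler that returns finished HTML. To keep the snippet runnable without dependencies, it builds the markup with a template string in place of renderToStaticMarkup; in a React project the body would come from rendering a component with that method. The handler name, response shape, and addition-only parser are assumptions made for illustration.

```javascript
// Evaluate expressions of the form "<digits>+<digits>"; anything else is rejected.
function evaluateSum(expression) {
  const match = /^(\d+)\+(\d+)$/.exec(expression);
  return match ? Number(match[1]) + Number(match[2]) : null;
}

// Map a request path to a static HTML response (no client-side JavaScript).
function handleRequest(pathname) {
  const prefix = "/calculate/";
  if (!pathname.startsWith(prefix)) {
    return { status: 404, body: "<h1>Not found</h1>" };
  }
  let expression;
  try {
    expression = decodeURIComponent(pathname.slice(prefix.length));
  } catch (error) {
    return { status: 400, body: "<h1>Malformed URL</h1>" };
  }
  const result = evaluateSum(expression);
  if (result === null) {
    return { status: 400, body: "<h1>Unsupported expression</h1>" };
  }
  // The expression was validated as digits and "+", so it is safe to interpolate.
  const body = `<!DOCTYPE html>
<html>
  <head><title>${expression} = ${result}</title></head>
  <body><h1>${expression} = ${result}</h1></body>
</html>`;
  return { status: 200, body };
}

console.log(handleRequest("/calculate/34+15").body);
```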

Server-side Rendering With Rehydration (SSRH)

Imagine the same scenario as the one described above, but this time we need a fully functional React application on the client.

We are going to perform the first render on the server and send back HTML content along with the JavaScript files. React will rehydrate the server-rendered markup and the application will behave like a CSR application from this point on.

React provides built-in methods to perform these actions.

The first request is handled by the server and subsequent renders are handled by the client. Therefore, such apps are called universal React apps (rendered on both server and client). The code to handle routing may be split (or duplicated) on client and server.

Code splitting is also a bit tricky: before React 18, ReactDOMServer did not support React.lazy, so you may have to use something like Loadable Components.

It should also be noted that ReactDOMServer only produces the initial render output. In other words, although the render method of each component is invoked, life-cycle methods like componentDidMount are not called. So you need to refactor your code to provide data to your components using an alternate method.

This is where frameworks like Next.js make an appearance. They mask the complexities associated with routing and code splitting in SSRH and provide a smoother developer experience. This approach yields mixed results when it comes to page performance though.

Pre-rendering to Static Content (PRS)

What if we could render a webpage before a user requests it? This could be done at build time or dynamically when the data changes.

We can then cache the resulting HTML content on a CDN and serve it much faster when a user requests it.

Pre-rendering is the rendering of content before a user request. This approach can be used for blogs and e-commerce applications since their content typically does not depend on data supplied by the user.
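A build-time pre-render step can be as simple as looping over the known content and producing one HTML document per page. The sketch below keeps the output in memory as a map from file path to HTML; a real build would write these files to disk or upload them to a CDN. The `posts` data, the output paths, and the template are hypothetical, and a template string stands in for rendering React components.

```javascript
// Escape text before placing it in HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render a single post to a complete HTML document.
function renderPost(post) {
  return `<!DOCTYPE html>
<html>
  <head><title>${escapeHtml(post.title)}</title></head>
  <body><h1>${escapeHtml(post.title)}</h1><p>${escapeHtml(post.body)}</p></body>
</html>`;
}

// Pre-render every post ahead of any user request.
function prerender(posts) {
  const output = new Map();
  for (const post of posts) {
    output.set(`/blog/${post.slug}/index.html`, renderPost(post));
  }
  return output;
}

const posts = [
  { slug: "hello-world", title: "Hello, world", body: "First post." },
  { slug: "tips", title: "Tips & tricks", body: "Second post." },
];
const files = prerender(posts);
console.log([...files.keys()]);
```

Running the same step again whenever a post changes covers the "dynamically when the data changes" case.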

Pre-rendering With Rehydration (PRH)

We may want our pre-rendered HTML to be a fully functional React app when a client renders it.

After the first request is served, the application will behave like a standard React app. This mode is similar to SSRH in terms of routing and code-splitting functions.

Performance Matrix

The moment you have been waiting for has arrived. It’s time for a showdown. Let’s look at how each of these rendering paths affects web performance metrics and determine the winner.

In this matrix, we assign a score to each rendering path based on how well it does in a performance metric.

The score ranges from 1 to 5:

- 1 = Unsatisfactory

- 2 = Poor

- 3 = Moderate

- 4 = Good

- 5 = Excellent

| Render path | TTFB (time to first byte) | LCP (largest contentful paint) | TTI (time to interactive) | Bundle size | Total |
|---|---|---|---|---|---|
| CSR | 5: HTML can be cached on a CDN | 1: Multiple trips to the server to fetch HTML and data | 2: Data fetching + JS execution delays | 2: All JS dependencies need to be loaded before render | 10 |
| CSRB | 4: HTML can be cached given it does not depend on request data | 3: Data is loaded with application | 3: JS must be fetched, parsed, and executed before interactive | 2: All JS dependencies need to be loaded before render | 12 |
| SSRS | 3: HTML is generated on each request and not cached | 5: No JS payload or async operations | 5: Page is interactive immediately after first paint | 5: Contains only essential static content | 18 |
| SSRH | 3: HTML is generated on each request and not cached | 4: First render will be faster because the server rendered the first pass | 2: Slower because JS needs to hydrate DOM after first HTML parse + paint | 1: Rendered HTML + JS dependencies need to be downloaded | 10 |
| PRS | 5: HTML is cached on a CDN | 5: No JS payload or async operations | 5: Page is interactive immediately after first paint | 5: Contains only essential static content | 20 |
| PRH | 5: HTML is cached on a CDN | 4: First render will be faster because the server rendered the first pass | 2: Slower because JS needs to hydrate DOM after first HTML parse + paint | 1: Rendered HTML + JS dependencies need to be downloaded | 12 |
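The totals are plain sums of the four scores, which makes it easy to recompute the ranking if your team values some metrics more than others. The sketch below reproduces the totals from the matrix; the optional `weights` parameter is a hypothetical extension, not part of the scoring above.

```javascript
// Scores copied from the performance matrix.
const scores = {
  CSR: { ttfb: 5, lcp: 1, tti: 2, bundleSize: 2 },
  CSRB: { ttfb: 4, lcp: 3, tti: 3, bundleSize: 2 },
  SSRS: { ttfb: 3, lcp: 5, tti: 5, bundleSize: 5 },
  SSRH: { ttfb: 3, lcp: 4, tti: 2, bundleSize: 1 },
  PRS: { ttfb: 5, lcp: 5, tti: 5, bundleSize: 5 },
  PRH: { ttfb: 5, lcp: 4, tti: 2, bundleSize: 1 },
};

// Sum the (optionally weighted) scores and sort from best to worst.
function rankRenderPaths(matrix, weights = { ttfb: 1, lcp: 1, tti: 1, bundleSize: 1 }) {
  return Object.entries(matrix)
    .map(([path, s]) => ({
      path,
      total:
        s.ttfb * weights.ttfb +
        s.lcp * weights.lcp +
        s.tti * weights.tti +
        s.bundleSize * weights.bundleSize,
    }))
    .sort((a, b) => b.total - a.total);
}

for (const { path, total } of rankRenderPaths(scores)) {
  console.log(`${path}: ${total}`);
}
```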

Key Takeaways

Pre-rendering to static content (PRS) leads to the highest-performing websites, while server-side rendering with rehydration (SSRH) or client-side rendering (CSR) may lead to underwhelming results.

It’s also possible to adopt multiple approaches for different parts of the website. For example, these performance metrics may be critical for public-facing webpages so that they can be indexed more efficiently, while they may matter less once a user has logged in and sees private account data.
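A mixed approach can be expressed as a small policy that maps sections of the site to render paths. The prefixes, the chosen paths, and the fallback below are hypothetical examples of such a policy, not a recommendation for any specific site.

```javascript
// Hypothetical policy: public content is pre-rendered, private pages are client-rendered.
const renderPolicy = [
  { prefix: "/blog", renderPath: "PRS" },
  { prefix: "/products", renderPath: "PRH" },
  { prefix: "/account", renderPath: "CSR" },
];

// Pick the render path for a URL; unknown sections use the fallback.
function pickRenderPath(pathname, fallback = "SSRH") {
  const rule = renderPolicy.find(
    ({ prefix }) => pathname === prefix || pathname.startsWith(prefix + "/")
  );
  return rule ? rule.renderPath : fallback;
}

console.log(pickRenderPath("/blog/react-seo")); // PRS
console.log(pickRenderPath("/account/settings")); // CSR
console.log(pickRenderPath("/search")); // SSRH
```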

Each render path has trade-offs depending on where and how you want to process your data. The important thing is that an engineering team is able to clearly see and discuss these trade-offs and choose an architecture that maximizes the happiness of its users.

Additional Resources and Considerations

While I tried to cover the currently popular techniques, this is not an exhaustive analysis. I highly recommend reading this article in which developers from Google discuss other advanced techniques like streaming server rendering, trisomorphic rendering, and dynamic rendering (serving different responses to crawlers and users).

Other factors to consider while building content-heavy websites include the need for a good content management system (CMS) for your authors, and the ability to easily generate/modify social media previews and optimize images for varying screen sizes.

Further Reading on the Toptal Blog:

- Using RTK Query in React Apps With Redux Toolkit

- Straightforward React UI Testing

- The Best React State Management Tools for Enterprise Applications

- React Test-driven Development: From User Stories to Production

- A Complete Guide to Testing React Hooks

- Working With React Hooks and TypeScript

- Reusable State Management With RxJS, React, and Custom Libraries

- Next.js vs. React: A Comparative Tutorial

Understanding the basics

Is React good for SEO?

React is a JavaScript framework developed to build interactive and modular UIs. SEO is not a design goal of React, but content websites built with React can be optimized to achieve better indexing and ranking.

Why should I care about SEO optimizing my React app?

Not all React apps need to be SEO-optimized. If you are building a content-heavy website on React—a real estate listings website, an e-commerce website, or a blog—you can benefit by optimizing your website for better rankings.

What is the difference between a single page application (SPA) and a website?

A single page application serves an app shell (empty HTML), which is then populated or rendered by JavaScript. All subsequent navigations only fetch relevant views and data while the app shell stays the same. A conventional website serves meaningful HTML content, which is then made interactive by JavaScript. All subsequent navigations load an entirely new webpage.

Is server-side rendering better than client-side rendering?

It depends. For example, server-side rendering of product pages for customer-facing e-commerce pages may lead to better rankings and conversion rates, although SSR may not be required for seller-facing product upload pages. Most large-scale websites would benefit from a hybrid approach.
